From there we'll build it out to increase its capabilities, probably over a few parts, revisiting it as time goes by.

For now, in this part, we'll keep things simple with a standard grid and a single tiled texture as our height field. In the next part we'll look into increasing the level of detail depending on the distance to the camera. In future parts we'll dive deeper into techniques to extend the map by swapping height textures, and look at how we can apply different terrain textures.

Adding structure to our shader matrices

Before we dive into our height field, there is one structural change I made to the project: I've added a number of access methods for our shaderMatrices structure and enhanced the structure to contain matrices that are calculated from our projection, view and model matrices.

As a result, the structure is now considered read-only and most of its members private. To set our projection, view and model matrices we call shdMatSetProjection, shdMatSetView and shdMatSetModel respectively.

Doing so resets the appropriate flags inside the structure to indicate which calculated matrices need updating.

While the projection, view and model matrices can be read directly, the calculated matrices are accessed through the following functions:

- shdMatGetInvView to get an inverse of the view matrix

- shdMatGetEyePos to get the position of the eye/camera

- shdMatGetModelView to get our model/view matrix

- shdMatGetInvModelView to get the inverse of our model/view matrix

- shdMatGetMvp to get our model/view/projection matrix

- shdMatGetNormal to get our normal matrix (world space)

- shdMatGetNormalView to get our normal matrix (view space)
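The caching behind these getters can be sketched roughly as follows. This is a minimal, hypothetical stand-in: shdMatSketch and the sketch* functions are not the project's actual shaderMatrices code, and only the model/view and model/view/projection matrices are covered. Setters mark the dependent matrices as dirty and getters recalculate them on demand, which is also why the structure gets handed around by pointer.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical, simplified stand-in for shaderMatrices; the project's real
   structure and member names may differ. Matrices are 4x4 column-major,
   as OpenGL expects. */
typedef struct {
    float projection[16];
    float view[16];
    float model[16];
    float modelView[16];  /* cached view * model */
    float mvp[16];        /* cached projection * view * model */
    bool modelViewDirty;
    bool mvpDirty;
} shdMatSketch;

/* out = a * b for column-major 4x4 matrices; out must not alias a or b. */
static void mat4Multiply(float *out, const float *a, const float *b) {
    for (int col = 0; col < 4; col++) {
        for (int row = 0; row < 4; row++) {
            float sum = 0.0f;
            for (int k = 0; k < 4; k++) {
                sum += a[k * 4 + row] * b[col * 4 + k];
            }
            out[col * 4 + row] = sum;
        }
    }
}

/* Sets all input matrices to identity and marks every cache as dirty. */
void sketchInit(shdMatSketch *m) {
    static const float identity[16] = {1, 0, 0, 0, 0, 1, 0, 0,
                                       0, 0, 1, 0, 0, 0, 0, 1};
    memcpy(m->projection, identity, sizeof(identity));
    memcpy(m->view, identity, sizeof(identity));
    memcpy(m->model, identity, sizeof(identity));
    m->modelViewDirty = true;
    m->mvpDirty = true;
}

void sketchSetProjection(shdMatSketch *m, const float *p) {
    memcpy(m->projection, p, sizeof(m->projection));
    m->mvpDirty = true; /* the model/view matrix doesn't depend on it */
}

void sketchSetView(shdMatSketch *m, const float *v) {
    memcpy(m->view, v, sizeof(m->view));
    m->modelViewDirty = true;
    m->mvpDirty = true;
}

void sketchSetModel(shdMatSketch *m, const float *mdl) {
    memcpy(m->model, mdl, sizeof(m->model));
    m->modelViewDirty = true;
    m->mvpDirty = true;
}

/* Recalculates view * model only if an input changed since the last call. */
const float *sketchGetModelView(shdMatSketch *m) {
    if (m->modelViewDirty) {
        mat4Multiply(m->modelView, m->view, m->model);
        m->modelViewDirty = false;
    }
    return m->modelView;
}

/* Recalculates projection * (view * model) only when needed. */
const float *sketchGetMvp(shdMatSketch *m) {
    if (m->mvpDirty) {
        mat4Multiply(m->mvp, m->projection, sketchGetModelView(m));
        m->mvpDirty = false;
    }
    return m->mvp;
}
```

Calling a getter twice in a row without touching the setters returns the cached matrix without any multiplication.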

As a result, this structure is now passed to our render logic by pointer.

Our height map

To create a height field we could load a 3D plane that has already been adjusted for height and simply render it, but that would be very inefficient. Either it would need to be immensely large and bog down your GPU, or you'd need to constantly update the vertex positions and waste a lot of bandwidth.

With our vertex shaders, however, there is a much simpler solution. We can use a texture to indicate the height at a specific point on our map and adjust the vertices that way. Here is the texture I'm currently using. It is a tileable map I grabbed off Google Images.

This map is not ideal for a real height field implementation, but for our example it will do fine. First of all, it's too small: it's only 225x225 pixels and covers only a small area before it starts repeating. Especially once we add our automatic level of detail adjustment, we'll need more detail in our maps.

The other issue is that it is a grayscale RGB image, and it really only makes sense to use one color channel in our shader. This limits the precision of our height to 256 levels and wastes a lot of memory, as the other two channels merely duplicate the first.

OpenGL is able to load single-channel 32-bit floating point images, which would give us the precision we need. The code doesn't change other than loading a different type of image, so for our goal today this limitation isn't an issue.
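As a rough sketch of what loading "a different type of image" could look like on the data side, the hypothetical helper below extracts the red channel of a packed 8-bit RGB buffer into normalized floats, ready to upload as a GL_R32F texture. Converting 8-bit data like this only saves memory; the extra precision only pays off when the source data itself has more than 8 bits per sample. The helper's name is an assumption and not part of the project.

```c
#include <stdlib.h>

/* Hypothetical helper: copies the red channel of a tightly packed 8-bit RGB
   image into a newly allocated float buffer, normalized to 0.0 - 1.0.
   The caller frees the result. Returns NULL if allocation fails. */
float *redChannelToFloat(const unsigned char *rgb, int width, int height) {
    size_t count = (size_t)width * (size_t)height;
    float *out = malloc(count * sizeof(float));
    if (out == NULL) {
        return NULL;
    }
    for (size_t i = 0; i < count; i++) {
        out[i] = rgb[i * 3] / 255.0f; /* red is the first of every 3 bytes */
    }
    return out;
}

/* With a GL context current and the texture bound, the buffer could then be
   uploaded as a single-channel 32-bit float texture:

   glTexImage2D(GL_TEXTURE_2D, 0, GL_R32F, width, height, 0,
                GL_RED, GL_FLOAT, data);
*/
```

The shader's texture(bumpMap, ...).r lookup stays the same; it simply returns the stored float.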

To communicate which texture map we are using as our height map, I've added a new member called bumpMapId to our shaderStdInfo structure and a texture map object called bumpMap to our material structure.

BumpMap may not be a completely fitting name, but as I don't want to bloat my structures with too many unnecessary members, I figured one member would be enough to use as a heightmap/bumpmap/normalmap type thing as we start writing more shaders.

While not yet used, I've also enhanced our texture map class to retain the dimensions of the image that was loaded.

Creating the object we'll render

It was tempting not to use an object at all and do everything in our vertex shader, but I figured this would be overkill and just waste GPU cycles. Instead I've added a simple function called meshMakePlane to our mesh3d library:

```c
bool meshMakePlane(mesh3d * pMesh, int pHorzTiles, int pVertTiles, float pWidth, float pHeight) {
    int x, y, idx = 0;
    float posY = -pHeight / 2.0;
    float sizeX = pWidth / pHorzTiles;
    float sizeY = pHeight / pVertTiles;

    // a plane of N tiles needs N+1 vertices in each direction
    for (y = 0; y < pVertTiles + 1; y++) {
        float posX = -pWidth / 2.0;

        for (x = 0; x < pHorzTiles + 1; x++) {
            // add our vertex
            vertex v;

            v.V.x = posX;
            v.V.y = 0.0;
            v.V.z = posY;

            v.N.x = 0.0;
            v.N.y = 1.0;
            v.N.z = 0.0;

            v.T.x = posX / pWidth;
            v.T.y = posY / pHeight;

            meshAddVertex(pMesh, &v);

            if ((x > 0) && (y > 0)) {
                // add two triangles for the tile to the upper left of this vertex
                meshAddFace(pMesh, idx - pHorzTiles - 2, idx - pHorzTiles - 1, idx);
                meshAddFace(pMesh, idx - pHorzTiles - 2, idx, idx - 1);
            }

            posX += sizeX;
            idx++;
        }

        posY += sizeY;
    }

    return true;
}
```

This simply creates a mesh for a flat 3D surface of a given size with a given number of tiles. It also sets up texture coordinates and normals, but we will be ignoring those.

In our load_objects function we set up a mesh3d object for this plane. Note that I've backtracked on my previous post: I'm no longer adding our skybox to our scene, and I won't add our height field to it either. Instead both are rendered separately after the whole scene has been rendered.

Our height field is initialized using this function:

```c
void initHMap(void) {
    material * mat;

    mat = newMaterial("hmap"); // create a material for our heightmap
    mat->shader = &hmapShader; // our new height map shader
    matSetDiffuseMap(mat, getTextureMapByFileName("grass.jpg", GL_LINEAR, GL_REPEAT, false));
    matSetBumpMap(mat, getTextureMapByFileName("heightfield.jpg", GL_LINEAR, GL_REPEAT, true));

    // 101x101 tiles need 102x102 vertices and 2 triangles (6 indices) per tile
    hMapMesh = newMesh(102 * 102, 101 * 101 * 3 * 2);
    if (hMapMesh != NULL) {
        strcpy(hMapMesh->name, "hmap");
        meshSetMaterial(hMapMesh, mat);
        meshMakePlane(hMapMesh, 101, 101, 101.0, 101.0);
        meshCopyToGL(hMapMesh, true);
    }

    // release our own reference, the mesh holds on to the material
    matRelease(mat);
}
```

Note that we have a new shader called hmapShader, which we've loaded alongside our other shaders. I'll discuss the shader itself in a minute.

We load two texture maps: a diffuse map, which is the texture we draw our surface with, and our bump map. Note that both are set up as repeating (tiled) textures.

Note also that our tiles are 1.0x1.0 in size and that our surface has 101x101 tiles. We'll scale this up in the vertex shader as needed.
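To double-check the sizes passed to newMesh, meshMakePlane's indexing scheme can be reproduced on plain arrays. The two functions below are hypothetical standalone helpers, not part of the mesh3d library: a plane of 101x101 tiles has 102x102 vertices and two triangles per tile, i.e. 101 * 101 * 3 * 2 indices.

```c
/* Number of vertices in a plane of horzTiles x vertTiles tiles. */
int planeVertexCount(int horzTiles, int vertTiles) {
    return (horzTiles + 1) * (vertTiles + 1);
}

/* Fills `indices` (room for horzTiles * vertTiles * 6 ints) using the same
   scheme as meshMakePlane and returns the number of indices written. */
int planeIndices(int horzTiles, int vertTiles, int *indices) {
    int idx = 0, n = 0;
    for (int y = 0; y <= vertTiles; y++) {
        for (int x = 0; x <= horzTiles; x++) {
            if (x > 0 && y > 0) {
                int upLeft = idx - horzTiles - 2; /* previous row, previous column */
                int up = idx - horzTiles - 1;     /* previous row, same column */
                int left = idx - 1;               /* same row, previous column */
                indices[n++] = upLeft; indices[n++] = up;  indices[n++] = idx;
                indices[n++] = upLeft; indices[n++] = idx; indices[n++] = left;
            }
            idx++;
        }
    }
    return n;
}
```

For a 2x2 plane (3 vertices per row) the first tile produces the triangles 0, 1, 4 and 0, 4, 3.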

We then render our surface in our engineRender function like so:

```c
if (hMapMesh != NULL) {
    // our model matrix is ignored here so we don't need to set it
    matSelectProgram(hMapMesh->material, &matrices, &sun);
    meshRender(hMapMesh);
}
```

Note also the extra glDisable(GL_BLEND) after we render our scene, as the last meshes we rendered would have been our transparent ones.

Our shaders

Now that we've got the object that needs to be rendered, it's time to have a look at our shaders.

Let's talk about the fragment shader first, because it is nearly identical to our texture map shader. I've left out specular highlighting and simplified the input parameters, but there really isn't much point in taking a closer look, as we've discussed texture mapping in detail before.

The magic happens in our vertex shader so let's have a look at that one part at a time:

```glsl
#version 330

layout (location=0) in vec3 positions;

uniform vec3 eyePos;        // our eye position
uniform mat4 projection;    // our projection matrix
uniform mat4 view;          // our view matrix
uniform sampler2D bumpMap;  // our height map

out vec4 V;
out vec3 N;
out vec2 T;
```

There is little to discuss about our inputs and outputs. All that's new here is that we ignore many of the inputs we had in our standard vertex shader, and that we have a sampler for our bump map.

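```glsl
float getHeight(vec2 pos) {
    // 10000 world units per repeat of our height map
    vec4 col = texture(bumpMap, pos / 10000.0);

    // only the red channel is used, scaled up to 0.0 - 1000.0
    return col.r * 1000.0;
}
```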

This is where the fun starts. Above is a function that takes a real world X/Z coordinate, divides it down into texture space, looks it up in our height map and scales the red channel up. With red ranging from 0.0 to 1.0, which corresponds to 0 to 255 in our 8-bit image, we get a height between 0.0 (black) and 1000.0 (red/white) with a precision of roughly 3.9 units (1000 / 255) per color step.

The value we divide our coordinates by defines how large an area our height map covers. Remember that texture coordinates run from 0.0 to 1.0, so at 1.0 we've reached pixel 225 of our tiny texture map. Dividing by 10000.0 therefore stretches those 225 pixels over 10000 world units, roughly 44 units per pixel, before the map repeats. With larger texture maps we need to increase the value we divide by to cover the intended area at the same level of detail.

```glsl
vec3 calcNormal(vec2 pos) {
    vec3 cN;

    // height difference 10 units to either side in x and in z
    cN.x = getHeight(vec2(pos.s - 10.0, pos.t)) - getHeight(vec2(pos.s + 10.0, pos.t));
    cN.y = 20.0;
    cN.z = getHeight(vec2(pos.s, pos.t - 10.0)) - getHeight(vec2(pos.s, pos.t + 10.0));

    return normalize(cN);
}
```

The function above calculates the normal of our surface at a given point. We get the height slightly to the left and slightly to the right of our location to calculate a gradient, and do the same in our z direction (which is y on our height map). This is a quick and dirty, not very accurate method of calculating our normal, but it will do for the time being.

Now it's time to look at the main body of the shader:

```glsl
void main(void) {
    // get our position, we're ignoring our normal and texture coordinates...
    V = vec4(positions, 1.0);

    // our start scale, note that we may vary this depending on distance of ground to camera
    float scale = 1000.0;

    // and scale it up
    V.x = V.x * scale;
    V.z = V.z * scale;

    // ... main continues below
```

The bit above is simple and straightforward: we get the position of the vertex we're handling and then scale it up. Our 1.0x1.0 tiles have thus become 1000.0x1000.0 tiles. Note that we only scale the x and z members of our vector. Scaling y would be harmless, as it is still 0.0 at this point, but w must stay 1.0: the perspective divide divides x, y and z by w, so scaling w as well would divide our scale right back out.

```glsl
    // center our surface on the eye position, snapped to whole tiles
    V.x = V.x + (floor(eyePos.x / scale) * scale);
    V.z = V.z + (floor(eyePos.z / scale) * scale);
```

Now this is the first part of the magic. We always want our surface centered on our camera, but aligned to our tiles; otherwise our vertices slide smoothly across the height map as the camera moves, and we get a really funky wave effect on our surface. We thus take our eye position, divide it by our scale, floor it, and scale it back up.

```glsl
    // and get our height from our hmap
    V.y = getHeight(V.xz);
```

Now that we know the real world position of our vertex, we call our height function to set V.y. The nice thing here is that we can scale and modify our surface to our heart's content: for a given world space coordinate we'll always get the right height.

```glsl
    // calculate our normal
    N = calcNormal(V.xz);
    N = (view * vec4(N + eyePos, 1.0)).xyz;
```

Here we call our calcNormal function to calculate our normal. We add our eye position as a trick so we can apply our view matrix as is: the view matrix translates everything by the inverse of our camera position, so the two translations cancel out and we're left with a nicely rotated normal. Using a w of 0.0, as in (view * vec4(N, 0.0)).xyz, gives the same result by ignoring the translation altogether.

```glsl
    // and use our coordinates as texture coordinates in our fragment shader
    T = vec2(V.x / 2000.0, V.z / 2000.0);
```

Calculating our texture coordinate becomes a simple scale as well. Our grass texture repeats every 2000 world units while our height map repeats every 10000: we generally want our texture to cover a much smaller area than our height map, hence the lower number, even though our texture map is much larger than our height map.

```glsl
    // our on screen position by applying our view and projection matrix
    V = view * V;
    gl_Position = projection * V;
}
```

Last but not least, we need to apply our view and projection matrices. We store our position adjusted by the view matrix in V as our output for our lighting.

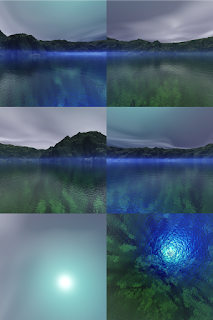

The end result is this:

Note that I've added z/c as controls to move the camera forwards/backwards and f to toggle wireframe. I've also adjusted our joystick controls: if you have a gamepad-type controller, I'm now using the second stick for movement.

Download the source here

So where from here?

The example works well, but when you start playing with it you'll quickly see its limitations. First off, the detail in the ground is very coarse. The tiles are much too large; it looks like an old vector game from the mid 90s :) The other issue is that as you move the camera up, the surface eventually covers a small enough part of the view that its edges are clearly visible.

There are two things we can do to combat this before we start using tessellation (which we'll talk about in the next part). Both are especially useful as a fallback if your hardware doesn't support tessellation shaders.

- The first we've already briefly mentioned: increasing the scale as the camera gains height. Just like with adjusting our horizontal position based on camera movement, it is important to increment this in fixed steps, or you'll end up with a wave effect as the vertices of your height field smoothly slide across your height map.

- The second is 'prebaking' some of the LOD into our mesh. Instead of all tiles having a uniform size of 1.0, we could increase the size of the tiles as we move away from the center of our map. This is harder than it seems, because you could again end up with a wave effect if you don't think it through properly, but it is well worth the effort.
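One way to increase the scale in fixed steps, as a minimal sketch: double the scale each time the camera height passes a threshold that doubles along with it. The function name and both parameters are hypothetical; the result would take the place of the hardcoded 1000.0 in the vertex shader, for instance passed in as a uniform.

```c
/* Hypothetical: picks the tile scale from the camera height in discrete
   doublings, so the scale only ever changes in fixed steps. baseScale and
   switchHeight are illustrative parameters, not values from the project. */
float scaleForHeight(float cameraHeight, float baseScale, float switchHeight) {
    float scale = baseScale;
    float limit = switchHeight;

    if (switchHeight <= 0.0f) {
        return baseScale; /* avoid a loop that would never terminate */
    }

    /* cap the number of doublings so extreme heights stay bounded */
    for (int i = 0; i < 16 && cameraHeight > limit; i++) {
        scale *= 2.0f;
        limit *= 2.0f;
    }
    return scale;
}
```

Because every scale is a multiple of the previous one, a position snapped with floor(eyePos / scale) * scale stays on the original tile grid. The spacing of the height samples still changes at each switch height, so expect a visible pop there.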

Finally, having a never-ending field of grass is fun but not very practical. What we want is a number of different textures for different terrains, blending from one texture to another. For this we need to introduce a third set of maps that serve as indices into which texture map needs to be used, and come up with a nice way to blend from one to the other. That's another topic we'll look at in the near future.